This short story is about how we refactored our CI pipeline to get the best out of Docker images and GitLab CI runners. As I didn’t find much on the Internet about a similar approach, I’ll make an attempt at blogging.

Before using Docker, our average CI pipeline worked like this:

We had a before_script running before the lint and test jobs: a 10-line script responsible for pulling all the dependencies and then building, installing and configuring the applications. It took between 4 and 10 minutes to run, depending on the dependencies and the build process.
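A minimal sketch of that pre-Docker layout as a .gitlab-ci.yml fragment. The script and `make` target names are hypothetical stand-ins for our real 10-line script. The point is that a `before_script` under `default:` runs before every job, so the lint and test jobs each pay the full install-and-build cost.

```yaml
stages:
  - lint
  - test

default:
  # Runs before the script of every job: each job rebuilds everything.
  before_script:
    - ./scripts/install_deps.sh   # hypothetical: pull all dependencies
    - make build                  # hypothetical: build the application
    - make install                # hypothetical: install and configure it

lint:
  stage: lint
  script:
    - make lint   # hypothetical lint target

test:
  stage: test
  script:
    - make test   # hypothetical test target
```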

This model was not perfect, but it worked pretty well and gave us what we needed for several years.

One day we decided to move our microservices from bare metal to Docker containers, so we naively added Docker support to the CI with a docker_build job running just before deployment.
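A sketch of that naive version, assuming a GitLab runner with the Docker executor in privileged mode (needed by the `docker:dind` service) and a hypothetical `./scripts/deploy.sh`. Only the new parts are shown; the lint and test jobs are unchanged.

```yaml
stages:
  - lint
  - test
  - docker_build
  - deploy

# lint and test jobs omitted: unchanged, each still running the full
# dependency install and build in its before_script.

docker_build:
  stage: docker_build
  image: docker:24.0.5
  services:
    - docker:24.0.5-dind
  variables:
    # TLS setup for the docker:dind service.
    DOCKER_TLS_CERTDIR: "/certs"
  # Replaces the project build steps; the Dockerfile builds the project
  # once more, inside the image.
  before_script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
  script:
    - docker build -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA" .
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"

deploy:
  stage: deploy
  script:
    - ./scripts/deploy.sh "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"   # hypothetical
```

In this layout the same build runs in every test job and once more inside the image.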

At the same time, we added some heavy dependencies to our microservice skeleton, and build times increased drastically. Annnnd we started getting annoyed by CI runs that sometimes exceeded 20–30 minutes. That is not acceptable for a CI job: developers will not wait that long before switching context, so the pipeline became a real cost for the whole development process.

Thus, we decided to refactor our pipeline process.

Long story short, here are the needs we identified:

We had to use our dedicated servers running GitLab CI runners configured as Docker executors, and our self-hosted GitLab configured with a private Docker registry.

The GitLab documentation told us that some environment variables are available during a job: commit- and ref-related variables (SHA, branch, tag, …), pipeline-related variables (CI_PIPELINE_ID) and GitLab-related variables (host, registry, tokens, …).

The first thing that came to mind: can we use those variables outside a job’s script section? The answer is yes :)

As you may have already noticed, pulling dependencies and building again in every job was not a very efficient strategy.

We thought about using artifacts to pass the built project from one job to the next, but the artifacts were huge and we were not fully convinced by this approach. Then we thought about running our CI jobs inside a previously built Docker image with the build already shipped in it. Doing so would also reduce the gap between testing and execution, and it seemed like fun.

A simple pipeline first builds an image, then runs a test inside it.
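A minimal sketch of such a pipeline, assuming a standard Docker-in-Docker setup (Docker executor, privileged runner). The `/ci` image path, the `/app` directory and the `make test` target are hypothetical. The test job’s `image:` key uses predefined variables outside of `script`, which GitLab expands before pulling the image.

```yaml
stages:
  - build
  - test

build_ci_image:
  stage: build
  image: docker:24.0.5
  services:
    - docker:24.0.5-dind
  variables:
    # TLS setup for the docker:dind service.
    DOCKER_TLS_CERTDIR: "/certs"
  script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
    # Tagged with the pipeline ID: every pipeline gets its own image.
    - docker build -t "$CI_REGISTRY_IMAGE/ci:$CI_PIPELINE_ID" .
    - docker push "$CI_REGISTRY_IMAGE/ci:$CI_PIPELINE_ID"

test:
  stage: test
  # The job container is the image pushed by build_ci_image above.
  image: "$CI_REGISTRY_IMAGE/ci:$CI_PIPELINE_ID"
  script:
    - cd /app && make test   # hypothetical: /app holds the shipped build
```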

We chose to name the image after the pipeline ID because it lets us start a new pipeline from an old commit without interfering with the old pipeline.

To avoid shipping development dependencies to production, we needed two images: one for CI and one for production.

The production-ready image is named after the commit SHA. Using Docker’s layer model, we extended the production image with the development and test tools needed during CI. Docker now supports ARG before FROM, so we no longer need a sed hack to pass the production image tag to our CI layer.

First we build a production image, and then we build a second image on top of it.

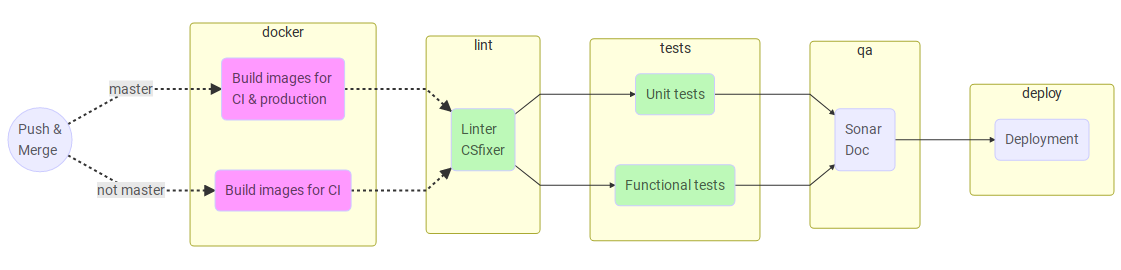
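A sketch of both build steps in a single job, assuming a hypothetical `Dockerfile.ci` that starts with `ARG PROD_IMAGE` followed by `FROM ${PROD_IMAGE}` and then installs the development and test tools (ARG before FROM needs Docker 17.05 or later). The `PROD_IMAGE` and `CI_IMAGE` variable names are our own choice for this example.

```yaml
build_images:
  stage: build
  image: docker:24.0.5
  services:
    - docker:24.0.5-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
    PROD_IMAGE: "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"        # production: commit SHA
    CI_IMAGE: "$CI_REGISTRY_IMAGE/ci:$CI_PIPELINE_ID"     # CI: pipeline ID
  script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
    # 1. Production image, without development dependencies.
    - docker build -t "$PROD_IMAGE" -f Dockerfile .
    - docker push "$PROD_IMAGE"
    # 2. CI image layered on top; the tag reaches FROM through a build arg.
    - docker build -t "$CI_IMAGE" --build-arg PROD_IMAGE="$PROD_IMAGE" -f Dockerfile.ci .
    - docker push "$CI_IMAGE"
```

Both builds run against the same Docker daemon, so the second build finds the production image locally instead of pulling it.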

We chose to run some jobs only on master, so we made two different build jobs: one for master and one for feature branches. This part depends heavily on your git workflow; here we use a slightly modified version of nvie’s GitFlow (master and develop are the same branch).

Our .gitlab-ci.yml puts all of this together.
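A minimal sketch of how such a file can be organized, assuming, purely for illustration, that the difference between the two build jobs is that only master pushes the production image and deploys. The job names, the `.docker_build` template, `/app`, `make test` and `./scripts/deploy.sh` are hypothetical; `extends` and `rules` are standard GitLab CI keywords.

```yaml
stages:
  - build
  - test
  - deploy

variables:
  PROD_IMAGE: "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"
  CI_IMAGE: "$CI_REGISTRY_IMAGE/ci:$CI_PIPELINE_ID"

# Hidden template (leading dot) shared by both build jobs.
.docker_build:
  stage: build
  image: docker:24.0.5
  services:
    - docker:24.0.5-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  before_script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"

build_master:
  extends: .docker_build
  rules:
    - if: '$CI_COMMIT_BRANCH == "master"'
  script:
    - docker build -t "$PROD_IMAGE" .
    - docker push "$PROD_IMAGE"
    - docker build -t "$CI_IMAGE" --build-arg PROD_IMAGE="$PROD_IMAGE" -f Dockerfile.ci .
    - docker push "$CI_IMAGE"

build_feature:
  extends: .docker_build
  rules:
    - if: '$CI_COMMIT_BRANCH != "master"'
  script:
    # The production image is built locally as a base but never pushed.
    - docker build -t "$PROD_IMAGE" .
    - docker build -t "$CI_IMAGE" --build-arg PROD_IMAGE="$PROD_IMAGE" -f Dockerfile.ci .
    - docker push "$CI_IMAGE"

test:
  stage: test
  image: "$CI_REGISTRY_IMAGE/ci:$CI_PIPELINE_ID"
  script:
    - cd /app && make test   # hypothetical

deploy:
  stage: deploy
  rules:
    - if: '$CI_COMMIT_BRANCH == "master"'
  script:
    - ./scripts/deploy.sh "$PROD_IMAGE"   # hypothetical deploy script
```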

A very useful feature we had not anticipated was the ability to pull the image that ran the tests and debug inside it when a pipeline failed. A simple docker run -it foo.bar/ci:$CI_PIPELINE_ID /bin/sh got us debugging in seconds.

It may be interesting to think about the tradeoffs we made. We only encountered a few issues when rolling out the new CI model.

The first one is that, at the time of writing, GitLab does not offer an API to delete tags. So it is currently not possible to add a final task at the end of the pipeline that removes the tag from the registry and lets the garbage collector do its job. Fortunately, this project exists, and you just have to expose two endpoints with a Flask API or any tool you like.
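Once such a service exists, the final cleanup task can be an ordinary last stage. In this sketch, `TAG_CLEANER_URL` and its `DELETE .../tags/<image>/<tag>` route are hypothetical placeholders for the endpoints you expose yourself; nothing here is a GitLab API.

```yaml
stages:
  - build
  - test
  - cleanup

# build and test jobs omitted.

cleanup_ci_image:
  stage: cleanup
  image: alpine:3.19
  # No "when:" key, so GitLab's default on_success applies: a failed
  # pipeline keeps its CI image available for debugging.
  script:
    - apk add --no-cache curl
    # Hypothetical endpoint of the self-hosted tag-deletion service.
    - curl --fail -X DELETE "$TAG_CLEANER_URL/tags/ci/$CI_PIPELINE_ID"
```

Keeping the default on_success is a deliberate choice here: deleting the tag on failure would throw away exactly the image you want to open with docker run.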

The second, and major, tradeoff is the use of Docker-in-Docker. We went quick and dirty to start with, but this comes with some risks. This could be fixed by using the host Docker daemon and mounting the daemon socket into the containers. Another option is to build images jessfraz’s style.
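With socket binding, the runner’s `config.toml` mounts the host socket into job containers, typically with `volumes = ["/var/run/docker.sock:/var/run/docker.sock", "/cache"]` in its `[runners.docker]` section, and jobs no longer need the `docker:dind` service. A minimal sketch of a build job, assuming that runner configuration:

```yaml
build_ci_image:
  stage: build
  image: docker:24.0.5
  # No docker:dind service: the runner mounts the host's
  # /var/run/docker.sock and the docker CLI uses it by default.
  script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
    - docker build -t "$CI_REGISTRY_IMAGE/ci:$CI_PIPELINE_ID" .
    - docker push "$CI_REGISTRY_IMAGE/ci:$CI_PIPELINE_ID"
```

This moves the risk rather than removing it: every job on that runner can control the host daemon, and concurrent pipelines share the same host image cache.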

The third tradeoff is the intensive use of the Docker registry. We now need to monitor its IO closely. As we rely on it more and more, the bill might go up because of network fees, and if the registry goes down we will barely be able to test and deliver any code.

In the end, we measured an overall speed improvement of roughly 50%. Now we are happy, and the CI flow is pretty easy to understand.

I have a confession to make: I left some things out to keep this clear and straightforward. The main subject I skipped was integration testing. One way or another, you will have to make your components work together.

I guess there are two ways of doing this:

I’d like to thank Matters and the Ubeeqo team who empowered me during my internship. We experimented with really cool stuff and thanks to their mentoring I had the confidence to stand up and present my work.